Building a Q-Learning Agent for Game Theory with Argo Workflows

Introduction

In this post, I'll explain how I built a Q-learning agent that learns to play the Prisoner's Dilemma, and how I train and evaluate it with Argo Workflows. The project demonstrates how to train an AI to make strategic decisions in a classic game theory scenario, using Python on Kubernetes.

Project Overview

The repository contains a complete implementation of:

- A Q-learning agent that learns optimal strategies

- Multiple opponent strategies (Random, Tit-for-Tat, Always Cooperate, Always Defect)

- A containerized training and evaluation pipeline

- Argo Workflow definitions for orchestration
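To make the opponent strategies concrete, here is a minimal sketch of how they could be modelled. The class name `Opponent`, its `record` method and the `cooperate`/`defect` labels are assumptions made for illustration (only `titfortat` and `random` appear as strategy names in the commands later in this post); the repository's own implementation may differ.

```python
import random


class Opponent:
    """Hypothetical opponent model; names and labels are illustrative assumptions."""

    def __init__(self, strategy_type, cooperate_prob=0.5, seed=None):
        self.strategy_type = strategy_type
        self.cooperate_prob = cooperate_prob
        self.history = []  # list of (agent_action, opponent_action) pairs
        self._rng = random.Random(seed)

    def move(self):
        if self.strategy_type == "cooperate":
            return "C"
        if self.strategy_type == "defect":
            return "D"
        if self.strategy_type == "titfortat":
            # Cooperate first, then copy the agent's previous action.
            return self.history[-1][0] if self.history else "C"
        if self.strategy_type == "random":
            return "C" if self._rng.random() < self.cooperate_prob else "D"
        raise ValueError(f"unknown strategy: {self.strategy_type}")

    def record(self, agent_action, opponent_action):
        self.history.append((agent_action, opponent_action))


tft = Opponent("titfortat")
first = tft.move()      # no history yet, so Tit-for-Tat opens with "C"
tft.record("D", first)  # the agent defected in round 1
second = tft.move()     # Tit-for-Tat retaliates with "D"
print(first, second)    # C D
```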

The Prisoner's Dilemma

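The Prisoner's Dilemma is a fundamental game theory problem in which two players each choose, without knowing the other's move, to either cooperate (C) or defect (D). The payoff matrix used in this implementation maps each pair of moves (Player 1, Player 2) to the pair of payoffs (Player 1, Player 2):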

    PAYOFF_MATRIX = {
        ('C', 'C'): (3, 3),  # Both cooperate
        ('C', 'D'): (0, 5),  # Player 1 cooperates, Player 2 defects
        ('D', 'C'): (5, 0),  # Player 1 defects, Player 2 cooperates
        ('D', 'D'): (1, 1)   # Both defect
    }

Implementation Details

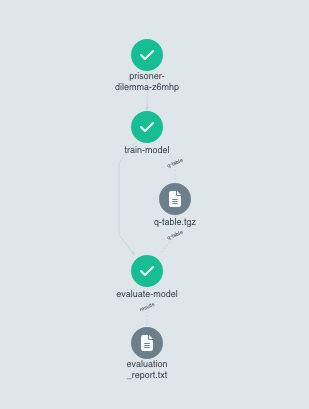

Argo Workflows

I implemented the pipeline using Argo workflows. The machine learning workflow is relatively simple and consists of two stages, storing artifacts in S3. Below is a screenshot of the workflow in the Argo dashboard:

Training Module

The training module (train.py) implements Q-learning with the following key components:

- Epsilon-greedy exploration strategy

- Configurable learning parameters (alpha, gamma, epsilon)

- Support for different opponent strategies
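These components boil down to two small operations: choosing an action with epsilon-greedy exploration, and applying the tabular Q-learning update. The sketch below isolates them. The helper names `choose_action` and `q_update` are hypothetical, and the default values for alpha and gamma are the ones used in train.py.

```python
import random

ACTIONS = ("C", "D")


def choose_action(q_values, epsilon, rng=random):
    """Epsilon-greedy: explore with probability epsilon, otherwise take the best-known action."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(q_values, key=q_values.get)


def q_update(q_old, reward, best_next, alpha=0.1, gamma=0.95):
    """One Q-learning step: move q_old towards reward + gamma * best_next."""
    return q_old + alpha * (reward + gamma * best_next - q_old)


# Worked step: an untrained entry (0.0), mutual cooperation pays 3,
# and the next state is also untrained, so the entry moves to about 0.3.
q_new = q_update(q_old=0.0, reward=3, best_next=0.0)
print(round(q_new, 3))

# With epsilon = 0 the choice is purely greedy.
print(choose_action({"C": 0.3, "D": 0.0}, epsilon=0.0))  # C
```

A higher alpha makes each round overwrite more of the old estimate, while a gamma close to 1 makes the agent weigh future rounds almost as heavily as the current one, which matters in a repeated game where today's defection shapes tomorrow's response.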

Reference to training implementation:

    import numpy as np

    # UserStrategy and PAYOFF_MATRIX are defined elsewhere in train.py.

    def train_q_learning(strategy_type, cooperate_prob, episodes=5000):
        user = UserStrategy(strategy_type, cooperate_prob)
        q_table = {}
        alpha = 0.1    # Learning rate
        gamma = 0.95   # Discount factor
        epsilon = 0.1  # Exploration rate

        for episode in range(episodes):
            # Each state is the previous round's (ai_action, user_action) pair
            state = ('Start', 'Start')
            total_reward = 0

            for _ in range(20):  # 20 rounds per episode
                # Epsilon-greedy action selection
                if state not in q_table:
                    q_table[state] = {'C': 0, 'D': 0}

                if np.random.random() < epsilon:
                    ai_action = np.random.choice(['C', 'D'])  # Explore
                else:
                    ai_action = max(q_table[state], key=q_table[state].get)  # Exploit

                user_action = user.move()
                reward = PAYOFF_MATRIX[(ai_action, user_action)][0]

                # Update Q-table
                next_state = (ai_action, user_action)
                if next_state not in q_table:
                    q_table[next_state] = {'C': 0, 'D': 0}

                q_table[state][ai_action] += alpha * (
                    reward + gamma * max(q_table[next_state].values()) - q_table[state][ai_action]
                )

                total_reward += reward
                state = next_state
                user.history.append((ai_action, user_action))

        return q_table

Evaluation Module

The evaluation module provides detailed performance metrics, including:

- Average score per round

- Cooperation rates

- Outcome distribution

- Strategy analysis

- Recommendations for improvement
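As a rough illustration of where these numbers come from, the sketch below aggregates a list of played rounds into the first three metrics. The `summarize` helper and the shape of its return value are hypothetical, not the repository's actual `stats` structure.

```python
from collections import Counter

# AI (row player) payoff for each (ai_action, user_action) pair,
# taken from the payoff matrix above.
AI_PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}


def summarize(rounds_played):
    """Aggregate a list of (ai_action, user_action) pairs into simple metrics."""
    n = len(rounds_played)
    outcomes = Counter(rounds_played)
    ai_actions = Counter(ai for ai, _ in rounds_played)
    user_actions = Counter(user for _, user in rounds_played)
    total = sum(AI_PAYOFF[pair] for pair in rounds_played)
    return {
        "avg_score": total / n,
        "ai_cooperation_rate": ai_actions["C"] / n,
        "user_cooperation_rate": user_actions["C"] / n,
        "outcomes": {pair: count / n for pair, count in outcomes.items()},
    }


sample = [("C", "C"), ("D", "C"), ("D", "D"), ("C", "C")]
metrics = summarize(sample)
print(metrics["avg_score"])              # 3.0
print(metrics["ai_cooperation_rate"])    # 0.5
print(metrics["user_cooperation_rate"])  # 0.75
```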

Reference to evaluation metrics:

    # Build report
    # (strategy_type, cooperate_prob, rounds, avg_score, cooperation_rate and
    # stats are computed earlier in the evaluation script)
    report = []

    # Evaluation Report
    report.append('')
    report.append(f"{' Evaluation Report ':=^50}")
    report.append(f"▪ Against strategy: {strategy_type.capitalize()}")
    if strategy_type == 'random':
        report.append(f"▪ User cooperation probability: {cooperate_prob:.1%}")
    report.append(f"▪ Rounds played: {rounds}")

    # Performance Metrics
    report.append('')
    report.append(f"{' Performance Metrics ':-^50}")
    report.append(f"▪ Average score per round: {avg_score:.2f}")
    report.append(f"▪ AI cooperation rate: {cooperation_rate:.1%}")
    report.append(f"▪ User cooperation rate: {stats['user_actions']['C']/rounds:.1%}")

    # Outcome Distribution
    report.append('')
    report.append(f"{' Outcome Distribution ':-^50}")
    outcome_line = ' '.join([f"{ai}/{user}: {count/rounds:.1%}" for (ai, user), count in stats['outcomes'].items()])
    report.append(outcome_line)

    # Strategy Analysis
    report.append('')
    report.append(f"{' Strategy Analysis ':-^50}")
    if avg_score >= 4.5:
        report.append("▪ AI strategy: Ruthless exploiter (always defects when possible)")
    elif avg_score >= 3.0:
        report.append("▪ AI strategy: Balanced approach (mix of cooperation/defection)")
    elif avg_score >= 2.0:
        report.append("▪ AI strategy: Cooperative tendency")
    else:
        report.append("▪ AI strategy: Overly passive (needs improvement)")

    # Theoretical Benchmarks
    report.append('')
    report.append(f"{' Theoretical Benchmarks ':-^50}")
    report.append("▪ Mutual cooperation: 3.00")
    report.append("▪ Optimal exploitation: 5.00")
    report.append("▪ Mutual defection: 1.00")
    # Both players choosing 50/50 at random: (3 + 0 + 5 + 1) / 4 = 2.25
    report.append("▪ Random baseline (~0.5): ~2.25")

    # Recommendations
    report.append('')
    report.append(f"{' Recommendations ':-^50}")
    if avg_score < 2.5:
        report.append("▪ Increase training episodes")
        report.append("▪ Reduce exploration rate (epsilon)")
        report.append("▪ Adjust learning rate parameters")
    elif avg_score < 3.5:
        report.append("▪ Moderate performance - try:")
        report.append(" ▪ Increase discount factor (gamma)")
        report.append(" ▪ Add reward shaping")
    else:
        report.append("▪ Excellent performance - consider:")
        report.append(" ▪ Testing against more complex strategies")
        report.append(" ▪ Implementing probabilistic strategies")
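The report relies on a few f-string format specifiers that are easy to misread. This short standalone snippet, using made-up values, shows what each one does:

```python
title = f"{' Evaluation Report ':=^50}"  # centre the text in 50 columns, padding with '='
rate = f"{0.7:.1%}"                      # multiply by 100, one decimal place, append '%'
score = f"{2.456:.2f}"                   # fixed-point with two decimal places

print(title)
print(rate)   # 70.0%
print(score)  # 2.46
```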

Containerization and Workflow

The project is containerized using Docker and orchestrated with Argo Workflows. The workflow consists of two main steps:

- Training the Q-learning agent

- Evaluating its performance

Reference to workflow definition:

    templates:
      - name: main
        dag:
          tasks:
            - name: train-model
              template: train-model
              arguments:
                parameters:
                  - name: strategy_type
                    value: "{{workflow.parameters.strategy_type}}"
                  - name: cooperate_prob
                    value: "{{workflow.parameters.cooperate_prob}}"
            - name: evaluate-model
              template: evaluate-model
              depends: train-model
              arguments:
                parameters:
                  - name: strategy_type
                    value: "{{workflow.parameters.strategy_type}}"
                  - name: cooperate_prob
                    value: "{{workflow.parameters.cooperate_prob}}"
                artifacts:
                  - name: q-table
                    from: "{{tasks.train-model.outputs.artifacts.q-table}}"
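For the `q-table` artifact to flow from `train-model` to `evaluate-model`, the training step has to write the table to a file that Argo can collect, and the evaluation step has to read it back. Here is a minimal sketch of that round trip, assuming pickle as the serialization format and a hypothetical file name; the repository may store the table differently.

```python
import pickle
import tempfile
from pathlib import Path


def save_q_table(q_table, path):
    """Write the Q-table to disk; pickle keeps the tuple state keys intact."""
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("wb") as f:
        pickle.dump(q_table, f)


def load_q_table(path):
    """Read a Q-table previously written by save_q_table."""
    with Path(path).open("rb") as f:
        return pickle.load(f)


# Round trip in a temporary directory. In the workflow, the path would be
# whatever the template's output artifact points at (hypothetical here).
q_table = {
    ("Start", "Start"): {"C": 0.3, "D": 0.0},
    ("C", "C"): {"C": 1.2, "D": 0.9},
}
with tempfile.TemporaryDirectory() as tmp:
    artifact = Path(tmp) / "q_table.pkl"
    save_q_table(q_table, artifact)
    restored = load_q_table(artifact)

print(restored == q_table)  # True
```

JSON would also work, but the tuple keys would first need converting to strings. Pickle avoids that step, with the usual caveat that pickle files should only be loaded from a trusted source such as your own artifact bucket.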

Running the Project

To run the project, you need:

- A Kubernetes cluster with Argo Workflows installed

- The Docker image (available at danielboothcloud/prisoners-dilemma)

You can execute the workflow with different strategies:

argo submit workflow.yaml -p strategy_type=titfortat

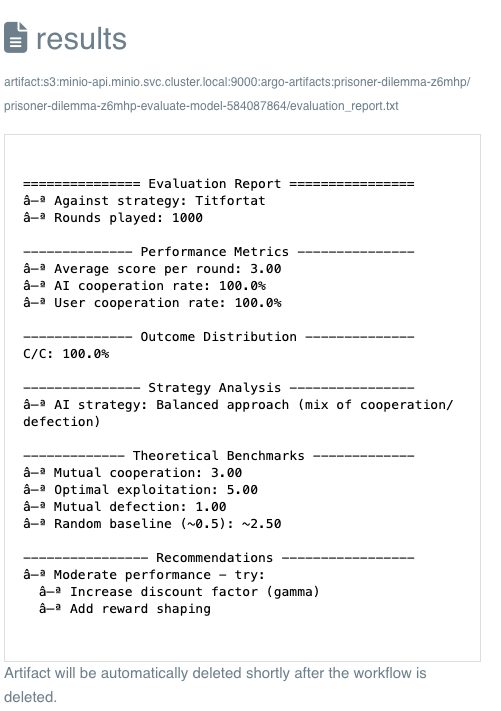

argo submit workflow.yaml -p strategy_type=random -p cooperate_prob=0.7Results and Analysis

The evaluation produces detailed reports showing the following:

- Performance against different strategies

- AI cooperation rates

- Strategy analysis

- Recommendations for improvement

For example, against Tit-for-Tat, the AI typically learns to cooperate, achieving an average score close to 3.0 per round, which is the payoff for sustained mutual cooperation.
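A quick back-of-the-envelope check shows why cooperation is the better policy against Tit-for-Tat. The sketch below replays one 20-round episode (the episode length used in training) for two fixed policies. The `play` helper is hypothetical and assumes that Tit-for-Tat opens with cooperation and then mirrors the AI's previous move.

```python
# AI (row player) payoffs from the payoff matrix.
AI_PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}


def play(ai_policy, rounds=20):
    """Total AI score when it always plays ai_policy against Tit-for-Tat."""
    total = 0
    opponent_action = "C"  # Tit-for-Tat opens by cooperating
    for _ in range(rounds):
        total += AI_PAYOFF[(ai_policy, opponent_action)]
        opponent_action = ai_policy  # next round it copies the AI's move
    return total


always_cooperate = play("C")
always_defect = play("D")
print(always_cooperate, always_cooperate / 20)  # 60 3.0
print(always_defect, always_defect / 20)        # 24 1.2
```

Defecting wins the first round (5 points) but locks both players into mutual defection afterwards, so over a full episode the cooperative policy earns more than twice as much.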

Conclusion

This project demonstrates how to:

- Implement Q-learning for strategic decision-making

- Containerize ML training pipelines

- Orchestrate ML workflows with Argo

- Evaluate and analyze AI behaviour in game theory scenarios

The complete code is available on my GitHub. You can experiment with different parameters and strategies to see how the AI adapts its behaviour.

Future Improvements

Potential enhancements could include:

- Implementation of more complex strategies

- Extended state representations

- Support for multi-agent learning scenarios